Live Monitoring

Live monitoring is the deployment of a trained model so that it accepts real-time data as input and generates model output for that data.

You can find information on using APIs for live monitoring in the live monitoring API guide.

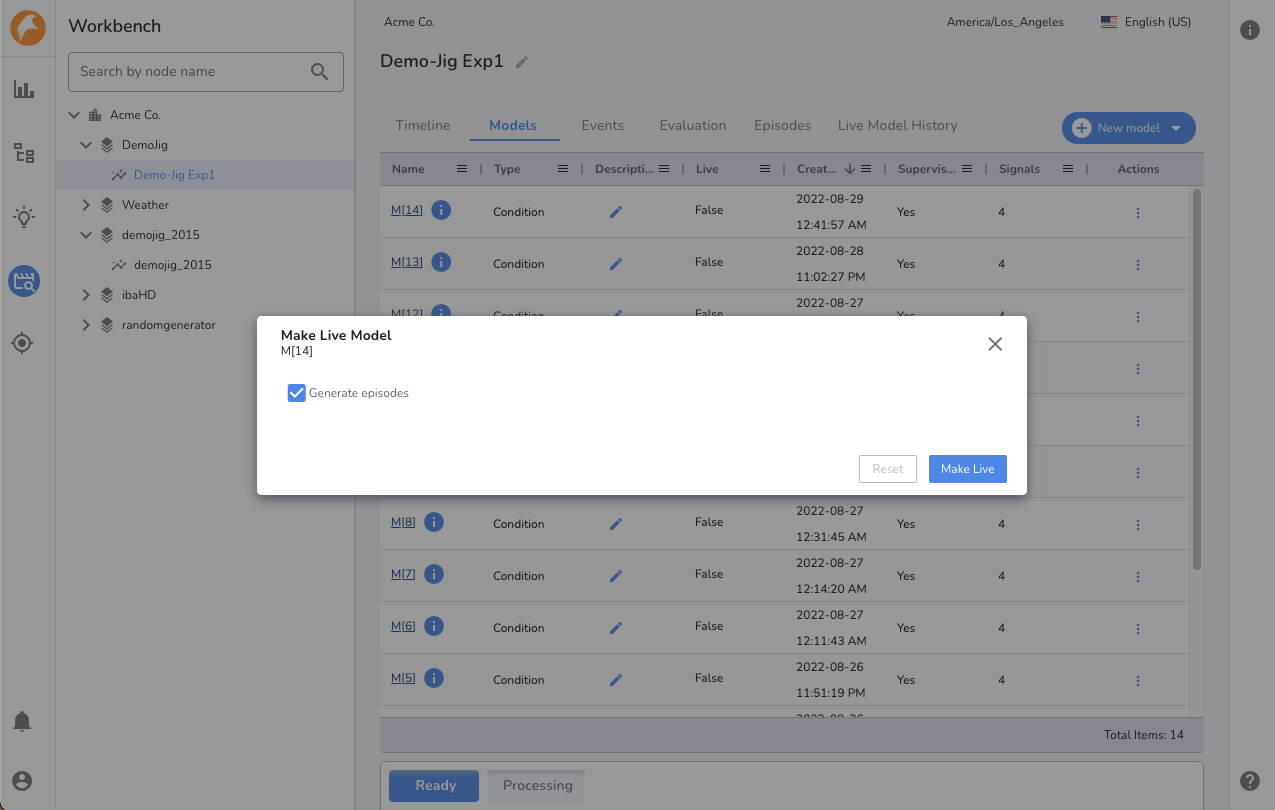

Make a Model Live

To make a model live for an assessment, go to the Models view and follow the steps below:

- 1. Pick the desired model from the list and click the Make Live action.

- 2. Select the Generate Episodes option and click the Make Live button to start the STARTLIVEMODEL activity.

- Track the activity in the Activity view and wait for it to complete.
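If you track the activity from a script rather than from the Activity view, the waiting step might be sketched as a simple polling loop. This is only an illustration: `wait_for_activity`, the `get_status` callable, and the status names (`COMPLETED`, `FAILED`, `CANCELLED`) are hypothetical and are not taken from the live monitoring API guide, which should be consulted for the actual calls and values.

```python
import time
from typing import Callable


def wait_for_activity(
    get_status: Callable[[], str],
    poll_seconds: float = 5.0,
    timeout_seconds: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_status() until it reports a terminal state or the timeout expires.

    get_status is a caller-supplied function (hypothetical here) that returns
    the current status of the STARTLIVEMODEL activity as a string.
    """
    # Assumed terminal status names; replace with the values your API returns.
    terminal_states = {"COMPLETED", "FAILED", "CANCELLED"}
    deadline = time.monotonic() + timeout_seconds

    while True:
        status = get_status()
        if status in terminal_states:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"activity still in state {status!r} after timeout")
        sleep(poll_seconds)
```

The caller decides how the status is fetched, so the loop itself makes no assumption about endpoints or authentication.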

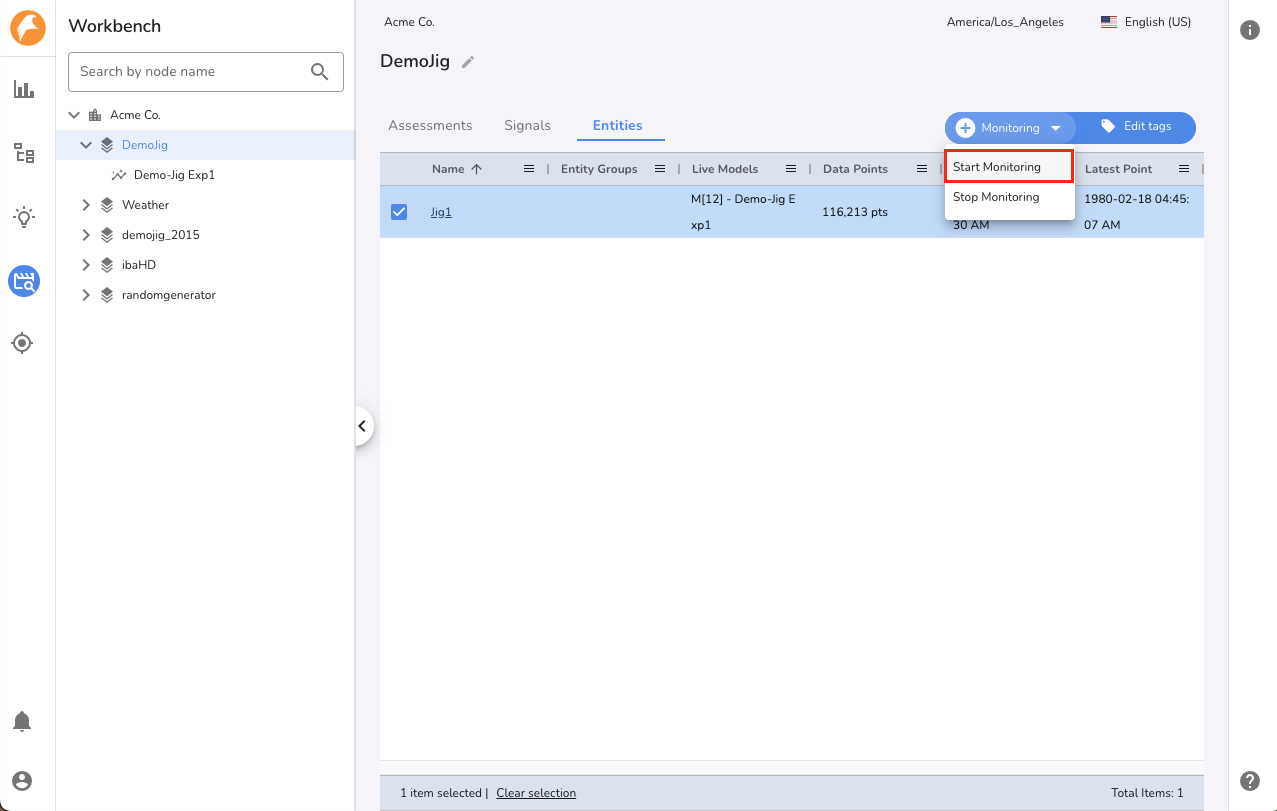

Make an Entity Live

After a model is live, you must start live monitoring for the entities that are part of the datastream. Follow the steps below:

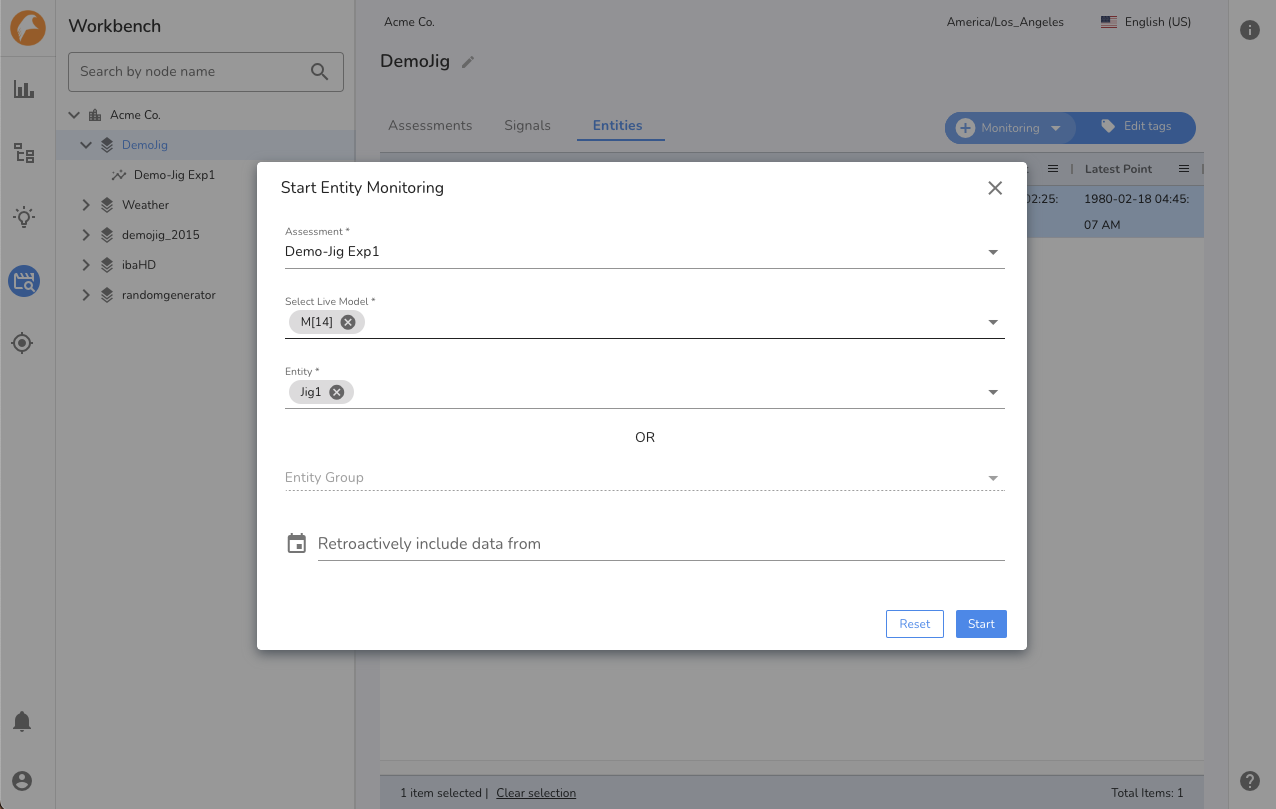

- 1. Go to the Entities view within the datastream. Select the entities for which to start live monitoring and click the Start Monitoring action.

- 2. Select a live model and click the Start button.

Note

This starts live monitoring for the selected entities, which then accept real-time data and produce model output.

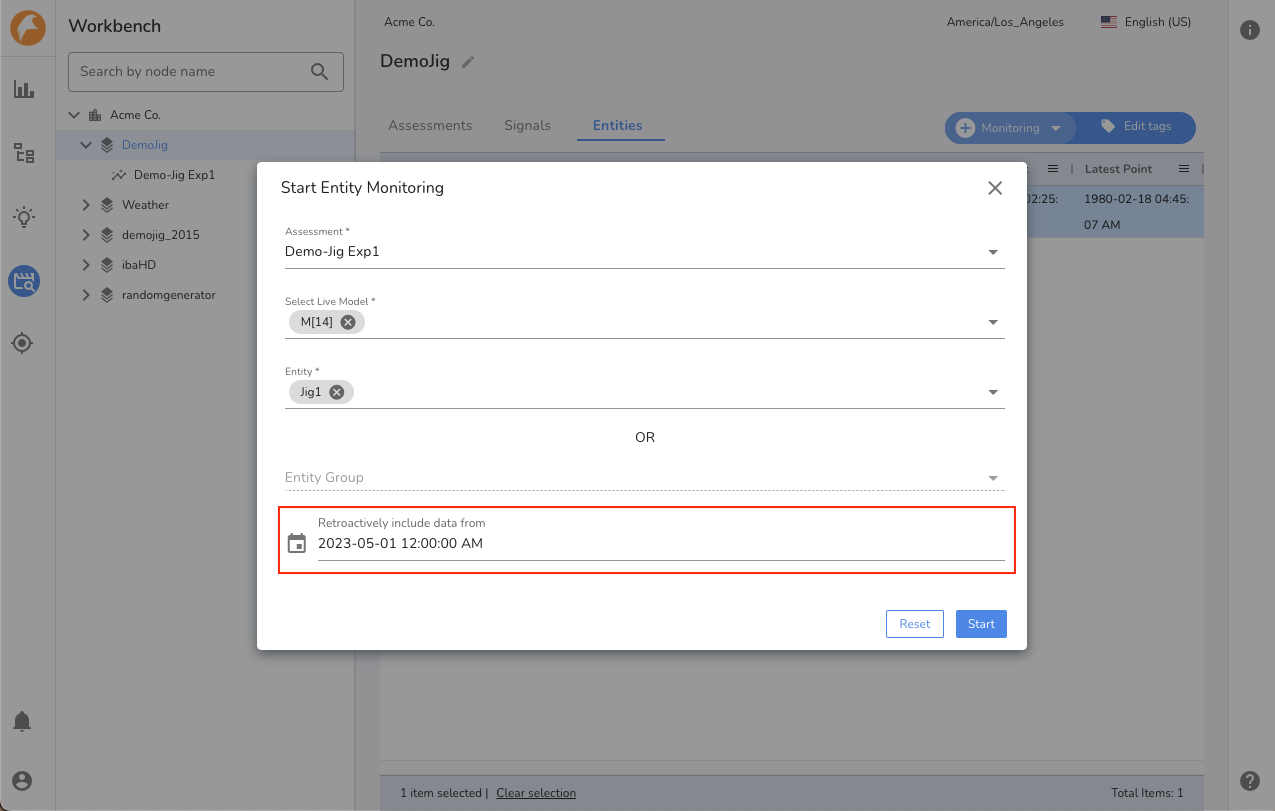

Start live monitoring from a point in time

Warning

Use this option only if the live model stopped producing model output because of data or system disruptions.

To produce model output for a past time range when starting live monitoring, set the Retroactively include data from option when you start entity monitoring. Set this option to the time from which live monitoring should begin processing data. Setting it more than 30 days in the past is not recommended.
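The 30-day recommendation can be checked before you enter a value. The following is a minimal sketch, assuming timezone-aware timestamps; the helper names `earliest_recommended_start` and `is_recommended_start` are illustrative and are not part of the product or its API.

```python
from datetime import datetime, timedelta, timezone

# Recommended maximum look-back for the "Retroactively include data from" option.
MAX_LOOKBACK = timedelta(days=30)


def earliest_recommended_start(now: datetime) -> datetime:
    """Return the oldest start time that stays within the 30-day recommendation."""
    return now - MAX_LOOKBACK


def is_recommended_start(start: datetime, now: datetime) -> bool:
    """Check that start is not in the future and not more than 30 days before now.

    Both arguments must be timezone-aware; comparing naive and aware
    datetimes raises TypeError.
    """
    return earliest_recommended_start(now) <= start <= now


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    candidate = now - timedelta(days=7)
    print("Earliest recommended start:", earliest_recommended_start(now).isoformat())
    print("Candidate within recommendation:", is_recommended_start(candidate, now))
```

A start time that fails the check is not necessarily rejected by the product; the documentation only recommends staying within 30 days.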